Introduction to DeepSeek V3

A few days ago, OpenAI released a preview of their latest model, o3. This development has sparked discussions about whether it could be considered Artificial General Intelligence (AGI), marking a significant advancement in AI technology. In this video, we explore the capabilities of DeepSeek V3, a model that stands out in its own right.

“So a few days ago, OpenAI dropped their o3 model, or at least the preview of what it can do.”

The video humorously references a tweet by McKay Wrigley, responding to Andrej Karpathy, suggesting the recreation of OpenAI’s o3 model in just 75 minutes with $20 of compute. While this is a playful exaggeration, it highlights the excitement and curiosity surrounding these advancements.

DeepSeek V3 is introduced as a powerful model, promising to deliver impressive results in the AI landscape.

DeepSeek V3: Performance and Efficiency

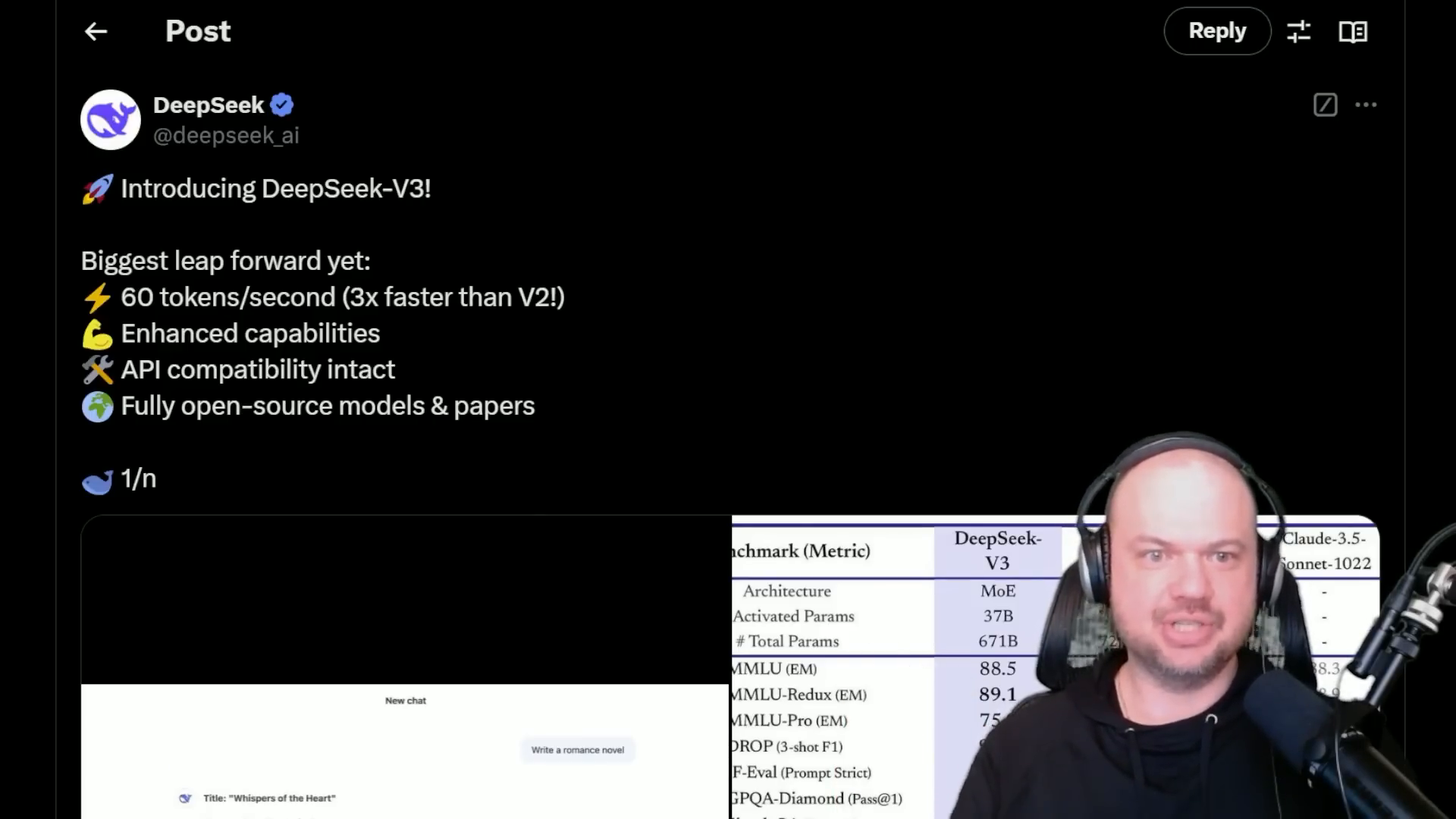

DeepSeek V3 has emerged as a highly efficient and powerful model in the realm of open-source AI. Known for its speed and cost-effectiveness, it significantly outperforms other open-source models. The model is fully open-source, making it accessible and affordable.

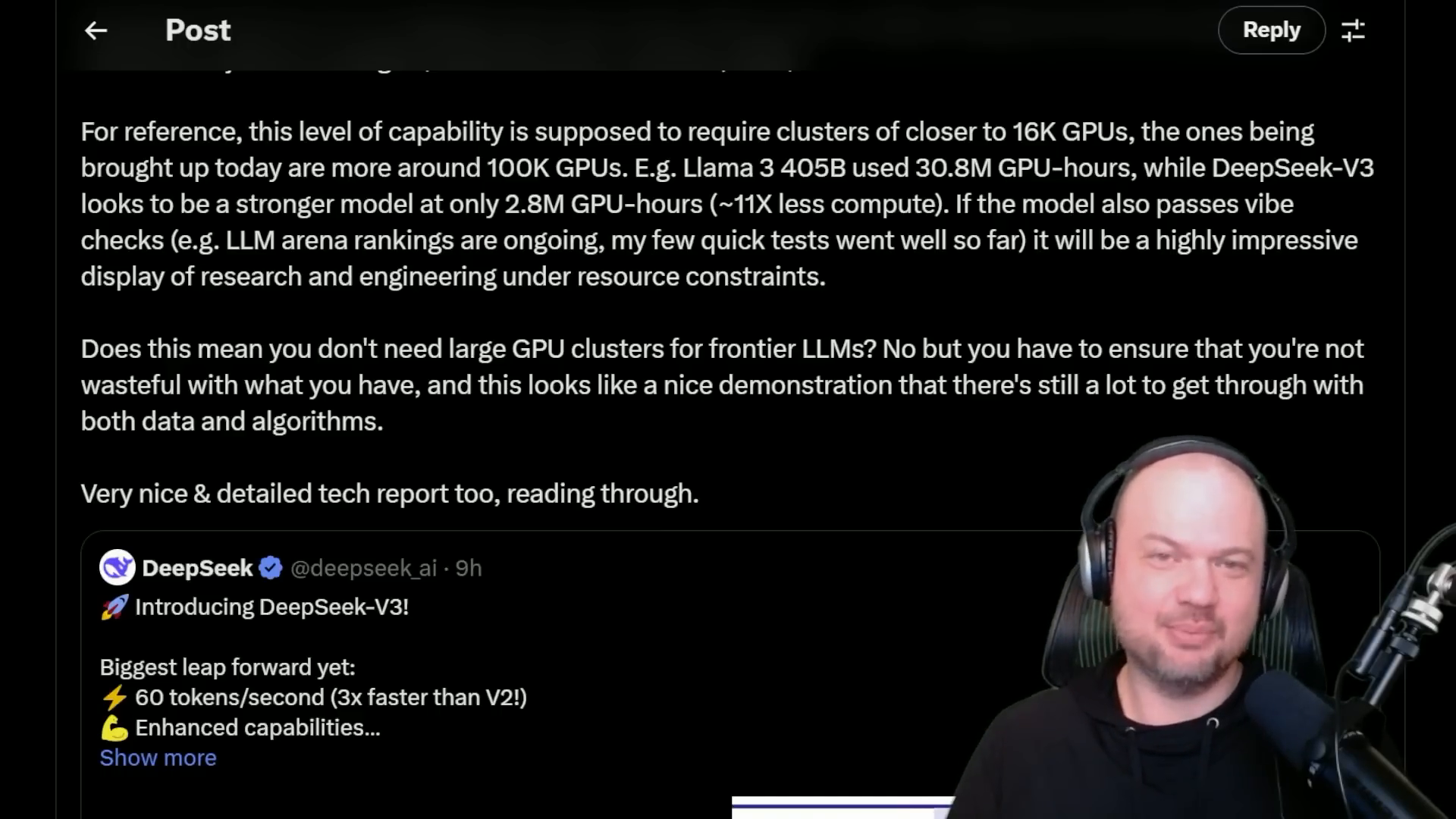

A notable mention by Andrej Karpathy highlights DeepSeek V3’s impressive performance. Despite being developed by a Chinese AI company on a limited budget, it has achieved frontier-grade capabilities. The model was trained using only 2,048 GPUs over two months, costing around six million dollars. This is a stark contrast to the typical requirement of clusters with up to 16,000 GPUs for similar capabilities.

In comparison, Llama 3 405B, a large language model with 405 billion parameters, required roughly 30 million GPU hours to train. DeepSeek V3 achieves stronger performance with about 2.8 million GPU hours, roughly eleven times less compute.
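The arithmetic behind that comparison can be checked with a short sketch. It uses only the rounded figures quoted in this article, taking the DeepSeek V3 figure as 2.8 million GPU hours, and it assumes that "two months" means 60 days of round-the-clock use. The function names are illustrative, not from any library.

```python
# Figures quoted in the article; treat them as rounded estimates.
LLAMA3_405B_GPU_HOURS = 30_000_000   # "30 million GPU hours"
DEEPSEEK_V3_GPU_HOURS = 2_800_000    # about 2.8 million GPU hours
NUM_GPUS = 2_048
TRAINING_DAYS = 60                   # assumption: "two months" taken as 60 days


def compute_ratio(baseline_hours: float, model_hours: float) -> float:
    """How many times more GPU hours the baseline used."""
    return baseline_hours / model_hours


def cluster_gpu_hours(num_gpus: int, days: float) -> float:
    """Upper bound on GPU hours if every GPU ran around the clock."""
    return num_gpus * days * 24


ratio = compute_ratio(LLAMA3_405B_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)
upper_bound = cluster_gpu_hours(NUM_GPUS, TRAINING_DAYS)
print(f"compute ratio: {ratio:.1f}x")
print(f"cluster upper bound: {upper_bound:,.0f} GPU hours")
```

With these rounded inputs the ratio comes out just under eleven, and 2,048 GPUs running for 60 days give an upper bound slightly above 2.8 million GPU hours, so the quoted cluster size, duration, and GPU-hour total are consistent with one another.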

“DeepSeek V3, this Chinese release that we’re talking about now, looks to be a stronger model at only 2.8 [million] GPU hours.”

If DeepSeek V3 continues to perform well in various tests, including large language model rankings and chatbot arenas, it will stand as a testament to exceptional research and engineering under resource constraints.

The Impact of Open Source AI

The ongoing AI race between the United States and China highlights the significant impact of open source AI on global technology development. The United States has tried to control the export of powerful AI chips to China, and the resulting scarcity of hardware was expected to hinder China’s ability to develop top-tier AI models. In practice, these measures have not been as effective as anticipated.

“We’ve talked about how is open source AI dangerous? Should it be outlawed?”

The export controls on NVIDIA GPUs and other advanced AI chips were intended to slow down AI advancements in regions like China. However, these controls appear to be less effective, as the development of AI models continues to progress rapidly. This situation raises questions about the effectiveness of such regulations and whether they can truly limit the spread and development of AI technologies.

In Europe, heavy-handed regulations have also been implemented, slowing down the public’s access to the latest AI models. This has sparked debates about the balance between regulation and innovation, with some advocating for a complete pause in AI development.

“China’s creating these models and publishing them and making them open source.”

The open source nature of many AI models means that knowledge and technology are more accessible than ever. This democratization of AI development allows for rapid advancements without the need for massive resources, challenging the notion that only well-funded entities can lead in AI innovation.

The discussion around open source AI continues to evolve, with comparisons being drawn between open source and proprietary models. The role of open source in AI development is crucial, as it fosters innovation and collaboration across borders, challenging traditional models of technological advancement.

Technical Insights into DeepSeek V3

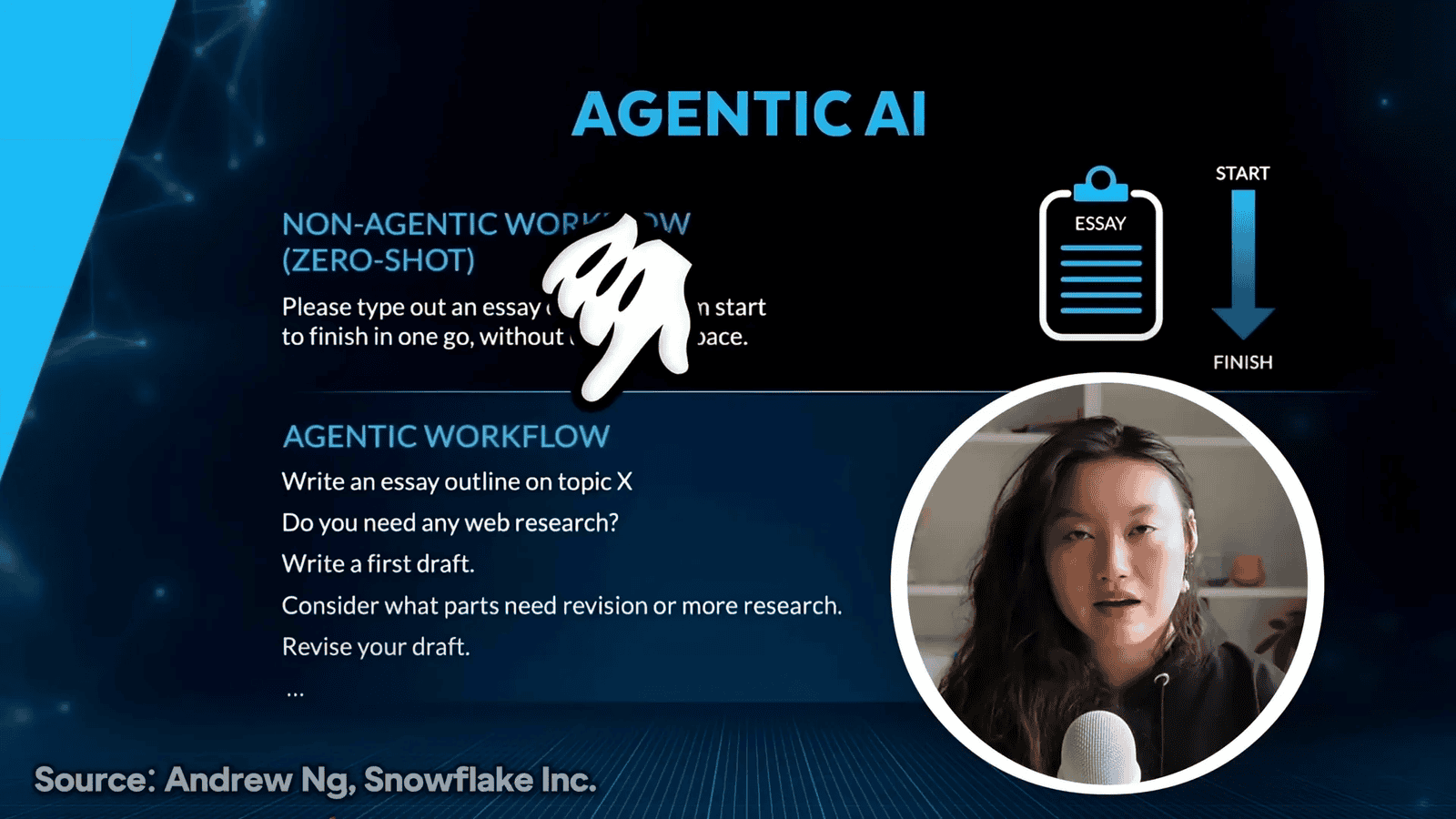

DeepSeek V3 represents a significant advancement in AI model architecture and training efficiency. One of its standout features is the Mixture of Experts (MoE) architecture. This approach divides part of the model into a collection of smaller sub-networks, known as experts, and a router sends each input to the few experts best suited to it. Because only a fraction of the parameters is active for any given input, processing is more efficient and targeted, which enhances the model’s overall performance.
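To make the routing idea concrete, here is a minimal toy sketch of generic top-k expert routing in plain Python. It is not DeepSeek V3's actual router: the experts are stand-in functions, the gate scores are supplied by hand rather than computed by a learned network, and the names `moe_forward`, `experts`, and `gate_scores` are hypothetical.

```python
import math
from typing import Callable, List

Expert = Callable[[float], float]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_scores: List[float],
                experts: List[Expert], top_k: int = 2) -> float:
    """Route x to the top_k highest-scoring experts and mix their outputs."""
    probs = softmax(gate_scores)
    # Indices of the top_k experts by gate probability.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Renormalize so the selected experts' weights sum to 1.
    norm = sum(probs[i] for i in chosen)
    return sum(probs[i] / norm * experts[i](x) for i in chosen)


# Hypothetical toy experts; real experts are feed-forward sub-networks.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_scores = [0.1, 2.0, 2.0, -1.0]  # hypothetical router output for this input

output = moe_forward(3.0, gate_scores, experts, top_k=2)
print(output)
```

Only two of the four experts run for this input, which is the source of the efficiency: the cost per input depends on `top_k`, not on the total number of experts.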

“They use the MoE, mixture of experts, kind of architecture.”

The MoE architecture not only improves the model’s ability to handle diverse tasks but also significantly enhances training efficiency. By overcoming communication bottlenecks in cross-node MoE training, DeepSeek V3 achieves nearly full computation-communication overlap. This advancement reduces training costs and allows for scaling up the model size without additional overhead.
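Why overlap matters can be illustrated with a simple timing model. This is only a back-of-the-envelope sketch, not a description of DeepSeek's training framework: the function `step_time` and the per-step timings are hypothetical, chosen purely for illustration.

```python
def step_time(compute_s: float, comm_s: float, overlap: float) -> float:
    """Wall-clock time of one training step when a fraction `overlap` of the
    communication is hidden behind computation (0.0 = none, 1.0 = all)."""
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must be between 0 and 1")
    # Communication can only be hidden for as long as computation lasts.
    hidden = min(comm_s * overlap, compute_s)
    return compute_s + comm_s - hidden


# Hypothetical per-step timings, chosen only for illustration.
COMPUTE_S = 1.0
COMM_S = 0.8

serial = step_time(COMPUTE_S, COMM_S, overlap=0.0)
overlapped = step_time(COMPUTE_S, COMM_S, overlap=1.0)
print(f"serial: {serial:.2f}s, fully overlapped: {overlapped:.2f}s")
```

In this model, a step with full overlap takes only as long as the computation itself, so cross-node communication stops adding to the training time as long as it fits inside the compute window.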

“This significantly enhances our training efficiency and reduces the training costs.”

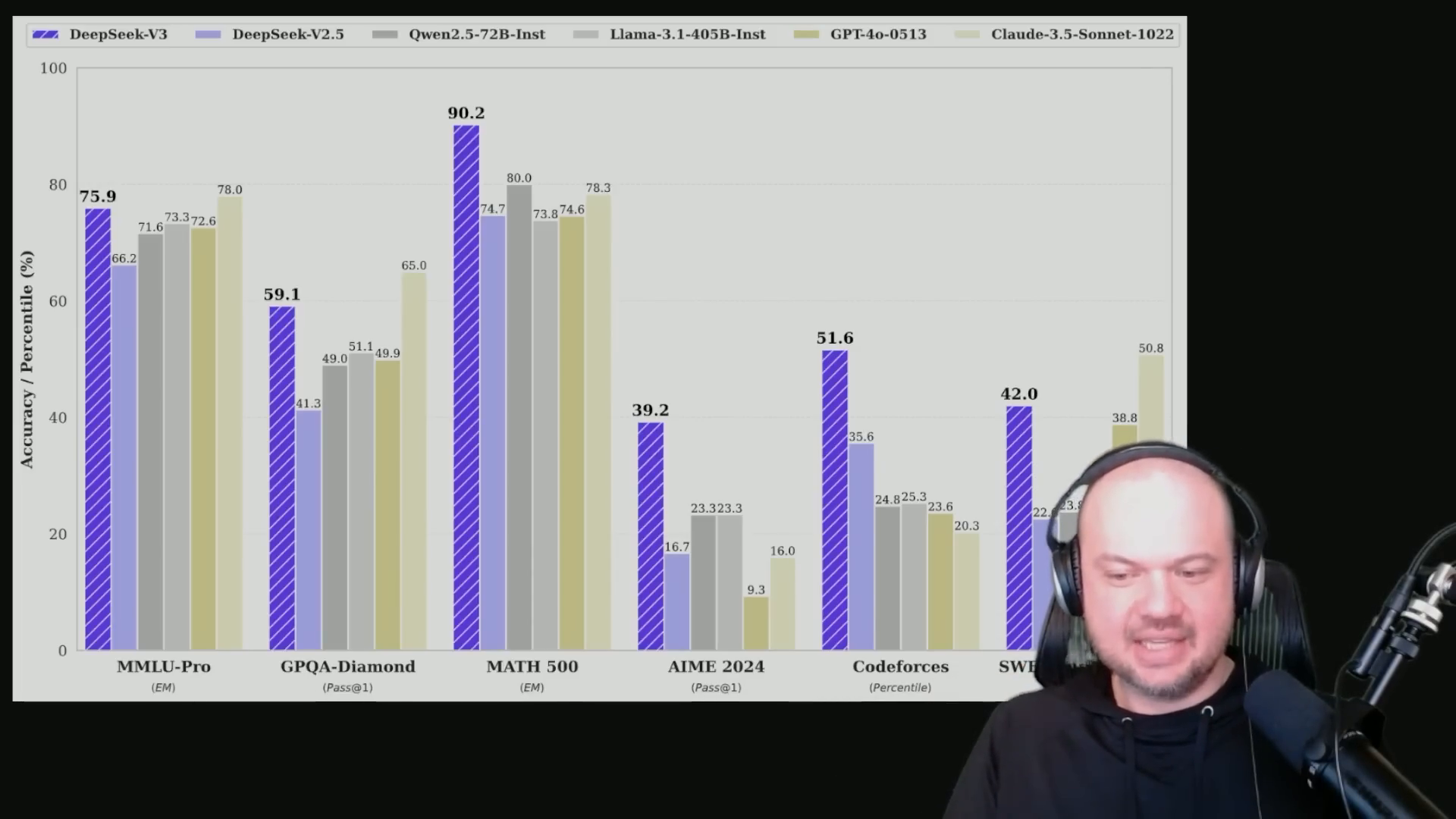

In terms of performance, DeepSeek V3 outperforms many other models, including its predecessor DeepSeek V2.5, and other well-known models like Llama 3.1 and GPT-4o. The model excels in various benchmarks such as MMLU-Pro, GPQA-Diamond, and Codeforces, demonstrating its superior capabilities.

The training process of DeepSeek V3 is noted for its stability, with no irrecoverable loss spikes or rollbacks required. This stability, combined with efficient pretraining strategies, positions DeepSeek V3 as a leading model in the AI landscape, capable of scaling efficiently and performing complex tasks with high accuracy.

DeepSeek V3 in Practice: Coding and Testing

In the rapidly evolving landscape of AI, breakthroughs often lead to a cascade of advancements across the industry. This phenomenon is evident in the development and application of DeepSeek V3, a model that has shown significant promise in coding and testing scenarios.

DeepSeek V3 incorporates innovative methodologies, such as knowledge distillation from DeepSeek R1, a separate series of reasoning models rather than a predecessor. This process transfers reasoning capabilities from the R1 series into standard large language models (LLMs), DeepSeek V3 in particular. Integrating R1’s verification and reflection patterns into V3 has notably improved its reasoning performance while keeping control over output style and length.

The practical applications of DeepSeek V3 are vast, with a particular emphasis on its coding capabilities. The model is reputed to be highly proficient in generating code, as demonstrated in a test where it was tasked with creating an HTML Space Invaders game. The model’s ability to quickly generate functional HTML and JavaScript code was impressive, showcasing its potential in real-world coding applications.

“This model is supposed to be very good at coding.”

User experience and feedback have highlighted the model’s speed and efficiency. During testing, DeepSeek V3 was able to rapidly iterate on code, making adjustments and improvements based on user input. This adaptability is crucial for developers looking to leverage AI in coding tasks.

“So very, very fast, first and foremost, I gotta say.”

The testing scenarios further revealed DeepSeek V3’s capability to handle complex tasks, such as adding features like power-ups and status indicators in the game. This iterative process demonstrated the model’s ability to maintain consistency while incorporating new functionalities, a testament to its robust coding capabilities.

Overall, DeepSeek V3’s performance in coding and testing scenarios underscores its potential as a powerful tool for developers. Its ability to generate and refine code efficiently makes it a valuable asset in the AI toolkit, promising to enhance productivity and innovation in software development.

Comparative Analysis: DeepSeek V3 vs Other Models

In this section, we delve into a comparative analysis of DeepSeek V3 against other AI models, such as GPT-4 and Llama. The focus is on performance across various benchmarks, highlighting both strengths and weaknesses.

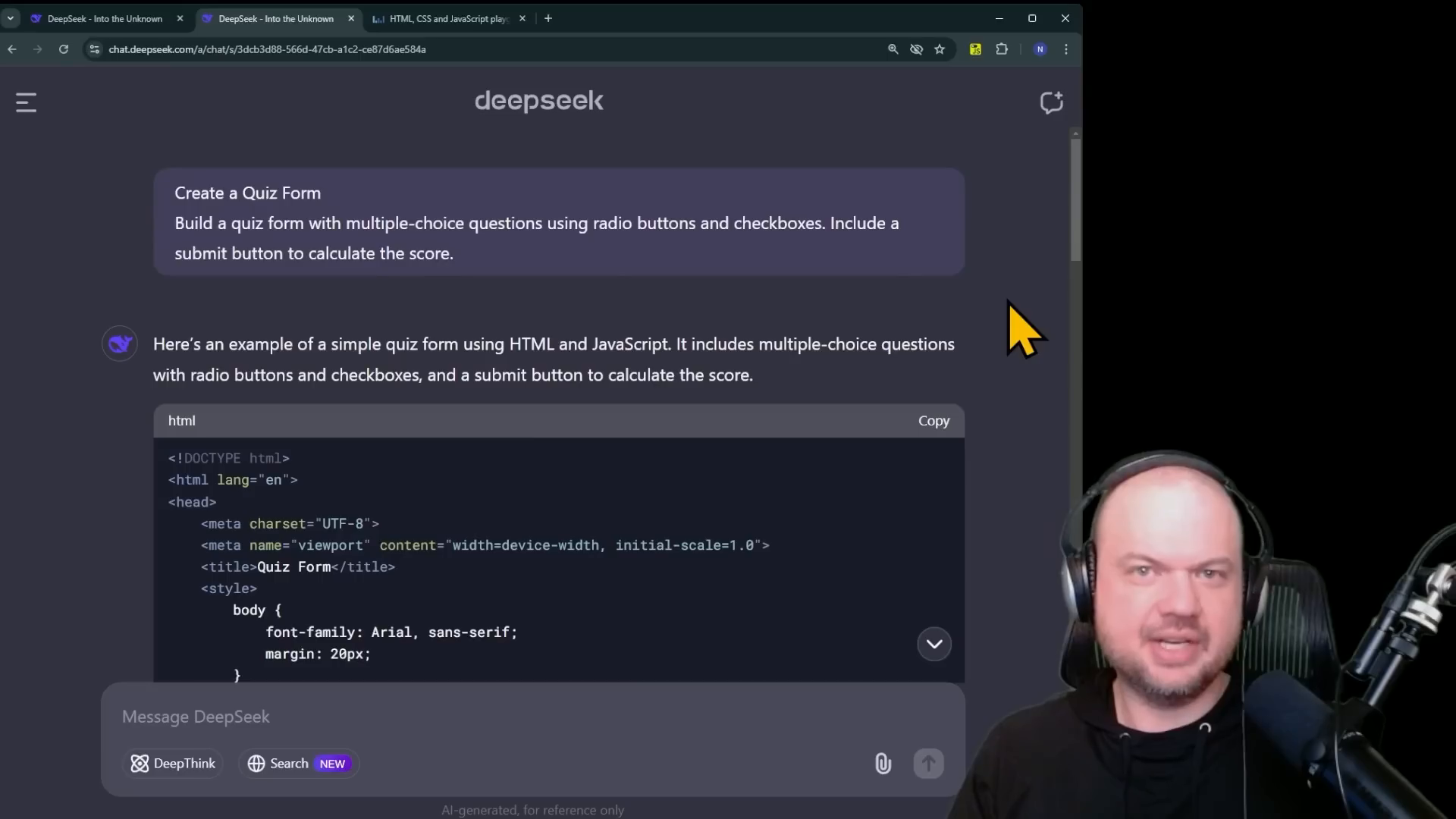

DeepSeek V3 has shown impressive speed and efficiency, making it a strong contender in the open-source AI landscape. It excels in tasks like creating quiz forms using HTML and JavaScript, where it effectively handles multiple-choice questions with radio buttons and checkboxes, providing a simple yet functional output.

“DeepSeek V3 is looking pretty good. It’s very fast.”

However, when it comes to reasoning questions, DeepSeek V3 does not perform as well as some other models, such as OpenAI’s o1 pro mode. For instance, in a series of reasoning challenges involving logic puzzles and hypothetical scenarios, DeepSeek V3 occasionally struggled to arrive at the correct conclusions. This includes tasks like determining the outcome of a race with distractions or solving logic puzzles involving liars.

“It’s not as good at some of these reasoning questions as, for example, the o1 pro mode.”

Despite these challenges, DeepSeek V3 remains a powerful tool, particularly for users seeking a fast and accessible open-source model. It is available for free on the web and can be run locally, offering a versatile option for various applications.

Conclusion: The Future of AI with DeepSeek V3

The conclusion of our exploration into DeepSeek V3 highlights its potential to challenge existing AI models, both open and closed source, such as Meta’s Llama 3.1. The model’s ability to be trained efficiently and cost-effectively is a significant advancement, indicating a future where AI models are not only more capable but also easier to create and operate.

“This is certainly showing that moving forward we’re not just going to have better, more capable models.”

Open-source AI looks increasingly unstoppable, with the potential for widespread access to advanced capabilities. Although DeepSeek V3 is not yet multimodal, that capability is on the horizon, promising even greater versatility.

The cost-effectiveness of DeepSeek V3 is particularly noteworthy. At 27 cents per million input tokens and $1.10 per million output tokens, it is a more affordable option than models like GPT-4 and Claude 3.5 Sonnet, which are significantly more expensive. This affordability makes open-source models attractive to developers, offering a competitive edge over closed-source models from companies like OpenAI and Anthropic.
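As a rough illustration of what those prices mean in practice, the sketch below estimates the cost of a single request. It assumes both quoted prices are per million tokens and may change over time; the function name `request_cost` and the token counts are hypothetical.

```python
# Prices quoted in the article, assumed to be USD per million tokens.
INPUT_USD_PER_MILLION = 0.27
OUTPUT_USD_PER_MILLION = 1.10


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD of one request at the quoted prices."""
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
cost = request_cost(200_000, 50_000)
print(f"estimated cost: ${cost:.3f}")
```

Under these assumptions, a fairly large request of 200,000 input tokens and 50,000 output tokens costs about 11 cents, which gives a sense of why per-token pricing at this level appeals to developers.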

“I don’t think open source AI is gonna be able to be stopped.”

The implications for the AI industry are profound. As the cost of creating models decreases, more individuals and companies can enter the space, fostering innovation and competition. This shift could impact major players like NVIDIA, as the demand for more accessible and affordable AI solutions grows.

In summary, DeepSeek V3 represents a pivotal moment in AI development, where efficiency, affordability, and open-source accessibility converge to shape the future of technology. The industry is poised for transformation, and the possibilities are vast.