In the wake of Meta’s content moderation overhaul, Mark Zuckerberg appeared on the Joe Rogan Experience to discuss the complexities of censorship and the importance of free speech in today’s digital landscape. This post summarizes their conversation, covering content moderation, pressure from government entities, and the evolving role of social media platforms in shaping public discourse.

Table of Contents

- The Journey of Content Moderation

- The Rise of Censorship

- The 2016 Election and its Aftermath

- The Impact of COVID-19 on Content Policies

- Government Pressure and Censorship

- The Role of Fact-Checkers

- The Slippery Slope of Moderation

- Challenges of Moderating at Scale

- The Future of Content Moderation

- The Importance of Community Input

- The Role of AI in Content Moderation

- The Need for a Balanced Approach

- The Intersection of Technology and Society

- Meta’s Vision for the Future

- FAQs about Meta’s Content Moderation

The Journey of Content Moderation

Content moderation has evolved significantly since the inception of social media. Initially, platforms focused on creating environments that enabled open dialogue and sharing. However, as user-generated content surged, so did the challenges associated with managing that content.

In the early days, moderation was more about managing practical issues like bullying and copyright infringement. The goal was to empower users while ensuring a safe space. Yet, as social media grew, so did the complexity of the issues faced.

Shifts in Focus

Over the last decade, there has been a noticeable shift towards ideological censorship. This change was prompted by significant political events that influenced public perception and regulatory pressures.

The rise of misinformation became a focal point, leading to more stringent content policies. The challenge was balancing the need for free expression with the responsibility to curb harmful content.

The Rise of Censorship

The landscape of censorship has changed dramatically in the past few years. Initially, concerns focused on hate speech and harassment. Over time, however, the scope expanded to cover a broader range of ideological content.

As social media platforms became central to political discourse, the pressure to regulate content intensified. This pressure was not just internal; external entities, including governments, began to influence how platforms approached moderation.

Influence of Political Events

Two pivotal events shaped the current censorship landscape: the 2016 U.S. presidential election and the COVID-19 pandemic. Both events highlighted the role of social media in shaping public opinion and the challenges of misinformation.

These events prompted calls for greater accountability from platforms, leading to a more aggressive stance on content moderation. The interplay between free speech and the need for accuracy became a contentious issue.

The 2016 Election and its Aftermath

The 2016 presidential election marked a turning point in content moderation practices. The emergence of misinformation narratives, particularly surrounding Russian interference, led to a reevaluation of how platforms managed content.

Initially, there was a reluctance to intervene heavily in political discourse. However, as allegations of misinformation grew, platforms began to implement stricter guidelines. This raised concerns about biases and the implications of being the arbiter of truth.

Challenges of Misinformation

The aftermath of the election saw an increase in ideological polarization. The narrative that misinformation significantly influenced voter behavior prompted platforms to take action. However, this often resulted in backlash from users who felt their voices were being stifled.

The challenge lay in distinguishing between harmful misinformation and legitimate discourse. This blurred line complicated the role of content moderators and raised questions about freedom of expression.

The Impact of COVID-19 on Content Policies

The COVID-19 pandemic introduced another layer of complexity to content moderation. Initially, the focus was on public health and safety, leading to the implementation of strict guidelines regarding the dissemination of health-related information.

As information surrounding the pandemic evolved, so did the policies. The rapid changes and conflicting messages from authorities made it difficult for platforms to maintain consistent moderation practices.

Government Influence and Public Health Concerns

During the pandemic, government entities exerted considerable pressure on platforms to regulate information about vaccines and treatments. This created tensions between public health initiatives and the principles of free speech.

While there was a genuine need to combat misinformation, the approach taken by platforms often led to accusations of censorship. The struggle to balance safety with free expression became a focal point of debate.

Government Pressure and Censorship

Government pressure has played a crucial role in shaping content moderation practices. The increasing scrutiny of social media platforms has led to calls for transparency and accountability in how content is moderated.

As governments recognize the influence of social media on public opinion, they often push for stricter regulations. This has resulted in platforms grappling with the need to comply with legal requirements while maintaining user trust.

The Balancing Act

Platforms now find themselves in a precarious position, balancing user safety with the right to free speech. The challenge is further compounded by the diverse perspectives of users worldwide.

As the landscape continues to evolve, the implications of content moderation will remain a critical topic of discussion. The ongoing interplay between government influence, public sentiment, and platform policies will shape the future of digital discourse.

The Role of Fact-Checkers

Fact-checkers play a pivotal role in content moderation. Their primary task is to verify the accuracy of information circulating on social media platforms. Because misinformation can spread quickly, reliable fact-checking is important.

Meta, for example, partnered with independent fact-checking organizations beginning in 2016. These collaborations aimed to enhance the credibility of information shared by users: by flagging false claims, fact-checkers helped maintain the integrity of online discourse. In January 2025, Meta announced that it would end this program in the United States and move to a Community Notes model.

Challenges Faced by Fact-Checkers

Despite their importance, fact-checkers face numerous challenges. The sheer volume of content generated daily makes it nearly impossible to review every post. Moreover, the rapid pace at which misinformation spreads complicates their efforts.

Another significant hurdle is the potential backlash from users. When a post is flagged or removed, it can lead to accusations of bias or censorship. This dynamic creates a delicate balancing act for fact-checkers as they strive to uphold accuracy while navigating public sentiment.

The Slippery Slope of Moderation

The journey of content moderation is fraught with complexities. As platforms attempt to navigate the fine line between protecting users and allowing free expression, they often find themselves on a slippery slope.

Many argue that overly aggressive moderation can lead to the stifling of legitimate discourse. When platforms prioritize the removal of content deemed harmful, they risk creating an echo chamber where only certain viewpoints prevail.

Consequences of Over-Moderation

Over-moderation can have unintended consequences. Users may feel alienated and disengage if they perceive that their voices are not being heard. This sense of exclusion can lead to a decline in user trust and participation.

Moreover, the potential for bias in moderation practices raises questions about who decides what constitutes harmful content. The implications of this power are significant, as it can shape public discourse and influence societal norms.

Challenges of Moderating at Scale

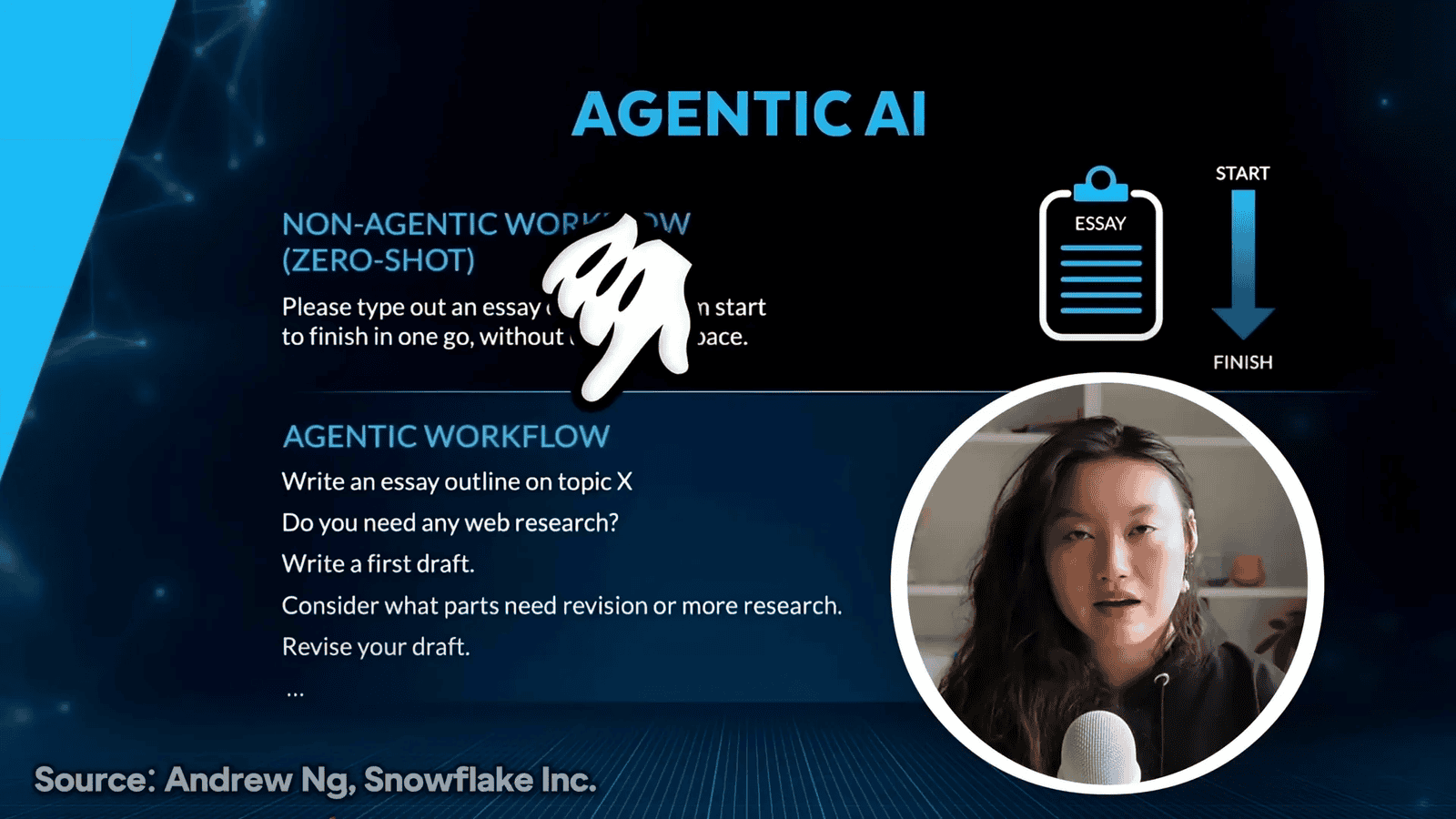

Moderating content at scale presents unique challenges. With billions of users generating vast amounts of content, platforms must leverage technology to keep pace. Automated systems can assist in identifying harmful content, but they are not infallible.

Many platforms employ machine learning algorithms to detect and flag inappropriate material. However, these systems often struggle with nuance, producing false positives (legitimate content flagged as harmful) and false negatives (harmful content that goes undetected). Both kinds of error complicate the moderation process.
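To make that trade-off concrete, here is a minimal Python sketch. It does not describe any platform's actual system: the scores, the labels, and the `count_errors` helper are all hypothetical, invented only to show how moving a flagging threshold trades one kind of error for the other.

```python
from typing import List, Tuple

# Hypothetical (score, is_actually_harmful) pairs. The scores stand in for
# the output of some classifier and are invented for illustration.
SAMPLES: List[Tuple[float, bool]] = [
    (0.95, True),
    (0.80, False),  # e.g. satire that the model mistakes for abuse
    (0.65, True),
    (0.40, True),   # harmful, but phrased subtly
    (0.30, False),
    (0.10, False),
]


def count_errors(samples: List[Tuple[float, bool]], threshold: float) -> Tuple[int, int]:
    """Return (false_positives, false_negatives) when flagging score >= threshold."""
    false_positives = sum(
        1 for score, harmful in samples if score >= threshold and not harmful
    )
    false_negatives = sum(
        1 for score, harmful in samples if score < threshold and harmful
    )
    return false_positives, false_negatives


for threshold in (0.3, 0.5, 0.9):
    fp, fn = count_errors(SAMPLES, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

In this toy data, a low threshold flags legitimate posts and a high threshold lets harmful ones through. No single setting removes both kinds of error.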

Human Moderation: A Necessary Component

While technology plays a crucial role, human moderators remain essential. They can provide context and make nuanced decisions that algorithms may overlook. However, the demand for human moderation is immense, creating a need for more resources and training.

Additionally, the emotional toll on moderators should not be underestimated. Regular exposure to graphic or distressing content can lead to burnout and mental health challenges. Platforms must prioritize the well-being of their moderation teams to ensure effective content management.

The Future of Content Moderation

The future of content moderation will likely involve a blend of technological advancements and human oversight. As social media platforms continue to evolve, so too will their approaches to managing content.

Emerging technologies, such as artificial intelligence and natural language processing, hold promise for improving moderation practices. These tools can enhance the accuracy and efficiency of content review, allowing platforms to respond more effectively to harmful material.

Adapting to New Challenges

As new challenges arise, platforms must remain agile in their moderation strategies. The ongoing evolution of misinformation tactics requires constant vigilance. Staying ahead of trends and adapting policies accordingly will be crucial for maintaining user trust.

Furthermore, transparency in moderation practices will be essential. Users must feel confident that moderation decisions are made fairly and equitably. This transparency can foster a greater sense of community and shared responsibility among users.

The Importance of Community Input

Community input is vital in shaping effective content moderation policies. Engaging users in discussions about moderation practices can lead to more informed and inclusive policies.

Platforms can benefit from soliciting feedback and allowing users to voice their concerns. This engagement can help identify areas where moderation may be lacking or overly stringent. By fostering dialogue, platforms can build trust and enhance user satisfaction.

Building a Collaborative Environment

Creating a collaborative environment requires platforms to prioritize user education. Informing users about moderation processes can empower them to navigate the platform responsibly. This education can also help limit the spread of misinformation and promote critical thinking.

Moreover, involving community members in the moderation process can lead to more nuanced understandings of context. Users often have insights that algorithms or even moderators may miss, making their contributions invaluable.

The Role of AI in Content Moderation

Artificial intelligence has become a pivotal component of content moderation in today’s digital landscape. By leveraging machine learning, platforms can analyze vast amounts of data quickly, identifying harmful content more efficiently than human moderators alone.

AI systems can flag inappropriate posts, detect patterns of misinformation, and even assess user behavior. This capability allows platforms to respond rapidly to emerging issues, such as hate speech or graphic content.

Benefits of AI in Moderation

- Speed: AI can process and analyze content in real time, so harmful material can be addressed swiftly.

- Scalability: As user-generated content grows, AI can scale to handle increased volumes without compromising effectiveness.

- Consistency: Algorithms apply the same standards uniformly, reducing variation between individual reviewers, although they can still reflect biases present in their training data.

Limitations of AI

While AI offers significant advantages, it is not without its limitations. Algorithms can misinterpret context, leading to false positives where legitimate content is flagged incorrectly.

Moreover, AI lacks the nuanced understanding of human emotions and cultural contexts, which can lead to misunderstandings in moderation. This is why human oversight remains crucial in the moderation process.
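One common way to combine automation with human oversight is to act automatically only on high-confidence cases and send uncertain ones to a reviewer. The sketch below illustrates that idea in Python. It is an assumption-laden example rather than Meta's pipeline: the `Post` class, the `route` and `triage` functions, and both cut-off values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Post:
    post_id: str
    score: float  # hypothetical model confidence that the post violates policy


# Illustrative cut-offs only; real values would be tuned per policy area.
REMOVE_AT = 0.95
REVIEW_AT = 0.60


def route(post: Post) -> str:
    """Pick a queue for a post based on the model's confidence."""
    if post.score >= REMOVE_AT:
        return "auto_remove"   # model is very confident: act without waiting
    if post.score >= REVIEW_AT:
        return "human_review"  # uncertain: a person supplies the context
    return "allow"


def triage(posts: List[Post]) -> Dict[str, List[str]]:
    """Group post IDs by the queue that route() assigns them to."""
    queues: Dict[str, List[str]] = {"auto_remove": [], "human_review": [], "allow": []}
    for post in posts:
        queues[route(post)].append(post.post_id)
    return queues


queues = triage([Post("a", 0.97), Post("b", 0.70), Post("c", 0.20)])
print(queues)
```

Widening the band between the two cut-offs sends more posts to human reviewers, which reduces automated mistakes but increases reviewer workload.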

The Need for a Balanced Approach

As platforms navigate the complexities of content moderation, a balanced approach is essential. Striking the right equilibrium between user safety and free expression is a daunting challenge.

Overzealous moderation can lead to the suppression of legitimate discourse, while a lax approach may allow harmful content to proliferate. Therefore, a nuanced strategy that incorporates diverse perspectives is vital.

Engaging Diverse Stakeholders

Involving a variety of stakeholders in the moderation process can enhance policy effectiveness. This includes users, advocacy groups, and experts in free speech and digital rights.

By actively engaging these groups, platforms can develop more comprehensive policies that reflect the diverse values and expectations of their user base.

The Intersection of Technology and Society

Technology and society are deeply intertwined, especially in the realm of content moderation. As platforms evolve, so do societal expectations regarding accountability and transparency.

Users increasingly demand greater insight into moderation practices and the algorithms that drive them. This push for transparency is vital for building trust and fostering a sense of community among users.

Transparency in Moderation Practices

- Clear Communication: Platforms must clearly articulate their moderation policies and the rationale behind them.

- User Education: Informing users about how moderation works can empower them to navigate the platform responsibly.

- Feedback Mechanisms: Implementing channels for user feedback can help platforms refine their moderation strategies.

Meta’s Vision for the Future

Looking ahead, Meta envisions a content moderation landscape that prioritizes safety while upholding free speech. The company aims to leverage advanced technologies and community engagement to create a more balanced approach.

Meta’s content moderation overhaul focuses on refining its algorithms and enhancing human oversight. The goal is to ensure that moderation practices adapt to the evolving needs of users and society.

Innovation in Moderation Techniques

Meta is exploring innovative moderation techniques that incorporate AI and community feedback. By continuously improving these systems, the company aims to address misinformation and harmful content more effectively.

With this approach, Meta aims to position itself as a leader in responsible content moderation and to set a benchmark for other platforms to follow.

FAQs about Meta’s Content Moderation

What is Meta’s content moderation overhaul?

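Meta’s content moderation overhaul refers to the policy changes the company announced in January 2025. These include ending its third-party fact-checking program in the United States in favor of a Community Notes model, simplifying its content policies, and focusing automated enforcement on illegal and high-severity violations. The stated aim is to balance user safety with free speech.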

How does AI contribute to content moderation?

AI contributes by automating the identification of harmful content, analyzing user behavior, and flagging inappropriate posts for review. It increases the speed and efficiency of moderation efforts.

Why is a balanced approach necessary?

A balanced approach is crucial to prevent the suppression of legitimate discourse while ensuring user safety. It recognizes the diverse perspectives of users and aims to create an inclusive environment.

How can users engage with moderation policies?

Users can engage by providing feedback on moderation practices, participating in community discussions, and staying informed about policy changes. This engagement fosters a collaborative environment.

This blog post is inspired by the video Joe Rogan Experience #2255 – Mark Zuckerberg. All credit for the video content goes to the original creator.